Backup and cloud storage company Backblaze has published data comparing the long-term reliability of solid-state storage drives and traditional spinning hard drives in its data center. Based on data collected since the company began using SSDs as boot drives in late 2018, Backblaze cloud storage evangelist Andy Klein published a report yesterday showing that the company's SSDs are failing at a much lower rate than its HDDs as the drives age.

Backblaze has published drive failure statistics (and related commentary) for years now; the hard drive-focused reports observe the behavior of tens of thousands of data storage and boot drives across most major manufacturers. The reports are comprehensive enough that we can draw at least some conclusions about which companies make the most (and least) reliable drives.

The sample size for this SSD data is much smaller, both in the number and variety of drives tested—they're mostly 2.5-inch drives from Crucial, Seagate, and Dell, with little representation of Western Digital/SanDisk and no data from Samsung drives at all. This makes the data less useful for comparing relative reliability between companies, but it can still be useful for comparing the overall reliability of hard drives to the reliability of SSDs doing the same work.

Backblaze uses SSDs as boot drives for its servers rather than for data storage, and its data compares these drives to HDDs that were also being used as boot drives. The company says these drives handle the storage of logs, temporary files, SMART stats, and other data in addition to booting. They're not writing terabytes of data every day, but they're not just sitting there doing nothing once the server has booted, either.

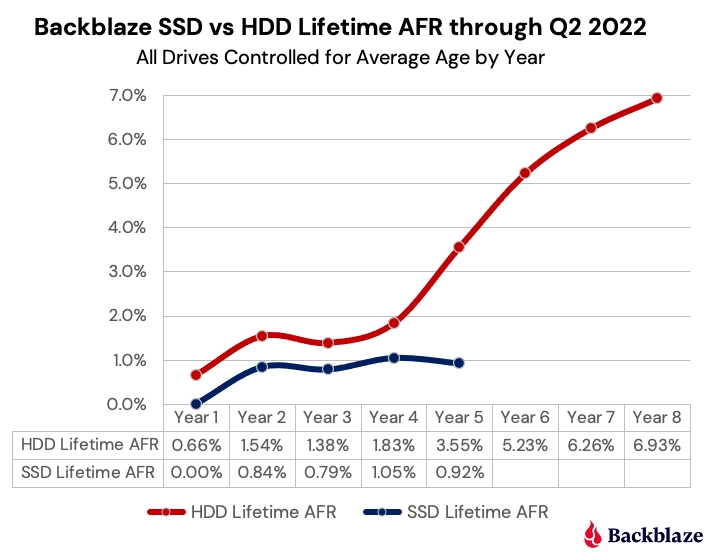

Over their first four years of service, SSDs fail at a lower rate than HDDs overall, but the curve looks basically the same—few failures in year one, a jump in year two, a small decline in year three, and another increase in year four. But once you hit year five, HDD failure rates begin going upward quickly—jumping from a 1.83 percent failure rate in year four to 3.55 percent in year five. Backblaze's SSDs, on the other hand, continued to fail at roughly the same 1 percent rate as they did the year before.
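For readers who want to reproduce this kind of figure, here is a minimal sketch of how a yearly failure rate can be computed. It assumes the annualized failure rate (AFR) definition Backblaze generally uses in its drive reports: failures divided by drive-years of service, expressed as a percentage. The function name and the drive counts are hypothetical and chosen only for illustration; only the 1.83 and 3.55 percent figures come from the report.

```python
def annualized_failure_rate(failures: int, drive_days: int) -> float:
    """Return failures per drive-year of service, as a percentage."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


# Hypothetical fleet: 1,000 drives running for a full year, 10 failures.
example_afr = annualized_failure_rate(failures=10, drive_days=1000 * 365)
print(f"Example AFR: {example_afr:.2f}%")

# HDD failure rates quoted in the report for years four and five.
hdd_year_four = 1.83
hdd_year_five = 3.55
hdd_jump = hdd_year_five / hdd_year_four
print(f"Year-five HDD rate is {hdd_jump:.2f}x the year-four rate")
```

Counting drive-days rather than drives matters because drives enter and leave service throughout the year, so a drive that ran for only three months contributes only a quarter of a drive-year to the denominator.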

These findings, both the reliability gap between the two drive types and the fact that HDDs start to sputter out sooner than SSDs, make intuitive sense. All else being equal, you'd expect a drive with a bunch of moving parts to have more points of failure than one with no moving parts. But it's still interesting to see that case made with data from thousands of drives over a few years of use.

Klein speculates that the SSDs "could hit the wall" and begin failing at higher rates as their NAND flash chips wear out. If that were the case, you'd see the lower-capacity drives begin to fail at a higher rate than higher-capacity drives since a drive with more NAND has a higher write tolerance. You'd also likely see a lot of these drives start to fail around the same time since they're all doing similar work. Home users who are constantly creating, editing, and moving around large multi-gigabyte files could also see their drives wear out faster than they do in Backblaze's usage scenario.

For anyone who would like to poke at the raw data Backblaze uses to generate its reports, the company makes it available for download on its website.
